Ultimate Guide to Fine-Tuning in PyTorch: Part 2 – Improving Model Accuracy

This article is the second part of a series on fine-tuning PyTorch models, with each part focusing on a different aspect of fine-tuning.


Outline

- Introduction

- Data Specific Techniques

- Working with Hyper-parameters for Optimal Performance

- Model Ensemble

- Other Overlooked but Very Important Techniques

- Conclusion

Introduction

As a machine learning practitioner, you might often find yourself in a situation where you’re fine-tuning a pre-trained model for your specific task, but you reach a point where you can’t improve the model’s accuracy any further. In this article, we’ll explore various techniques and strategies that you can use to boost your model’s accuracy. These methods are designed to help you overcome the plateau and achieve even better results in your machine learning projects. Let’s dive in and discover how to take your model’s performance to the next level!

Data Specific Techniques

When fine-tuning a model, the data plays a crucial role in determining its effectiveness and accuracy. Therefore, having a thorough understanding of your data and making the right choices during training are of utmost importance. In this section, we will explore several data-related techniques that can significantly improve your model's accuracy.

Quality and Quantity of Data

To achieve the best results in fine-tuning, it is vital to have a dataset that is both diverse and representative. Your dataset should cover the full range of scenarios and inputs your model will encounter in your specific task. More data typically improves model performance, so consider collecting or obtaining additional data if needed. However, quantity is not a substitute for quality: adding large amounts of noisy, mislabeled, or redundant examples increases training cost and may not improve, or may even hurt, what the model learns.

Be cautious about data skew, such as a few classes dominating the dataset, and ensure that the data is well distributed so the model's training is not biased toward the majority classes. Finding the right balance between the quality and quantity of data will significantly contribute to the model's predictive capabilities.
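A simple first check for skew is to count the labels per class. If one class dominates, PyTorch's `WeightedRandomSampler` can oversample the rare classes so that batches are closer to balanced. The sketch below assumes a classification dataset whose integer labels are available as a 1-D tensor; `make_balanced_loader` is a hypothetical helper name, and the synthetic 900/100 split is purely illustrative.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, WeightedRandomSampler

def make_balanced_loader(features, labels, batch_size=32):
    """Build a DataLoader that draws each class roughly equally often.

    Assumes `labels` is a 1-D tensor of integer class ids and every class
    appears at least once (otherwise its inverse frequency is infinite).
    """
    # Count how many examples each class has.
    class_counts = torch.bincount(labels)
    # Each class gets a weight inversely proportional to its frequency...
    class_weights = 1.0 / class_counts.float()
    # ...and every example inherits the weight of its class.
    sample_weights = class_weights[labels]
    sampler = WeightedRandomSampler(
        weights=sample_weights,
        num_samples=len(labels),  # one "epoch" worth of draws
        replacement=True,         # needed so rare examples can be drawn repeatedly
    )
    dataset = TensorDataset(features, labels)
    return DataLoader(dataset, batch_size=batch_size, sampler=sampler), class_counts

# Illustrative, deliberately skewed data: 900 examples of class 0, 100 of class 1.
torch.manual_seed(0)
labels = torch.cat([torch.zeros(900, dtype=torch.long), torch.ones(100, dtype=torch.long)])
features = torch.randn(1000, 8)
loader, counts = make_balanced_loader(features, labels)
print(counts.tolist())  # [900, 100]
```

Oversampling repeats minority examples many times per epoch, so it pairs well with augmentation; a class-weighted loss is an alternative that leaves the sampling untouched.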

Data Preprocessing and Augmentation

Ensure you carefully prepare your data by cleaning and normalizing it. This means removing unusual values, filling in missing information, and putting the data into a consistent format. Additionally, you can use data augmentation techniques to expand your training set. Techniques like rotation, scaling, cropping, or flipping can add variety to your data, making the model more robust.
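As a sketch of the normalization and augmentation steps, the code below standardizes each channel and applies two common image augmentations, a random horizontal flip and a padded random crop. It is written with core tensor operations only and assumes image batches shaped `(N, C, H, W)`; the function names are illustrative. In a real project, `torchvision.transforms` provides ready-made equivalents such as `Normalize`, `RandomHorizontalFlip`, and `RandomCrop`.

```python
import torch
import torch.nn.functional as F

def compute_channel_stats(images):
    """Per-channel mean and std over a (N, C, H, W) batch (use training data only)."""
    mean = images.mean(dim=(0, 2, 3))
    std = images.std(dim=(0, 2, 3))
    return mean, std

def normalize(images, mean, std):
    # Reshape the stats to (1, C, 1, 1) so they broadcast over N, H and W.
    return (images - mean.view(1, -1, 1, 1)) / std.view(1, -1, 1, 1)

def augment(images, flip_prob=0.5, pad=4):
    """Randomly flip images horizontally, then take a random crop after zero padding."""
    n, _, h, w = images.shape
    out = images.clone()
    # Random horizontal flip: mirror the width dimension for a random subset of images.
    flip_mask = torch.rand(n) < flip_prob
    out[flip_mask] = out[flip_mask].flip(dims=[3])
    # Random crop: pad all four sides, then cut an h x w window at a random offset.
    padded = F.pad(out, (pad, pad, pad, pad))
    for i in range(n):
        top = torch.randint(0, 2 * pad + 1, (1,)).item()
        left = torch.randint(0, 2 * pad + 1, (1,)).item()
        out[i] = padded[i, :, top:top + h, left:left + w]
    return out
```

Compute the normalization statistics on the training split and reuse them unchanged for validation and test data, and apply the random augmentations to training batches only.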

However, be cautious and select augmentation methods that suit your specific task. Some augmentations change the meaning of an example and can hurt accuracy: a horizontal flip is usually harmless for photos of animals, but it produces mirrored, unrealistic samples for images of text, and a large rotation can turn a handwritten 6 into a 9. By choosing the appropriate data preprocessing and augmentation approaches, you can optimize your model's performance and achieve better results in your fine-tuning process.

Data Cleaning and Error Analysis

Perform thorough data cleaning and conduct error analysis during the fine-tuning process. Analyze misclassified examples or cases where the model performs poorly to identify patterns or biases in the data. This analysis can guide you in further data preprocessing, augmentation, or the creation of specific rules or heuristics to address problematic cases.
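A practical starting point for error analysis is a confusion matrix plus the list of misclassified examples. The sketch below assumes a classification model that returns logits and a loader that yields `(inputs, targets)` in a fixed order (`shuffle=False`), so positions map back to dataset indices; `error_analysis` is a hypothetical helper name.

```python
import torch

@torch.no_grad()
def error_analysis(model, loader, num_classes):
    """Return a confusion matrix and the dataset indices of misclassified examples.

    Assumes `loader` iterates the dataset in order (shuffle=False).
    """
    model.eval()
    confusion = torch.zeros(num_classes, num_classes, dtype=torch.long)
    wrong_indices = []
    offset = 0
    for inputs, targets in loader:
        preds = model(inputs).argmax(dim=1)
        # Rows are true classes, columns are predicted classes.
        flat = targets * num_classes + preds
        confusion += torch.bincount(flat, minlength=num_classes ** 2).view(num_classes, num_classes)
        # Record the dataset positions of the mistakes for manual inspection.
        mistakes = (preds != targets).nonzero(as_tuple=True)[0]
        wrong_indices.extend((mistakes + offset).tolist())
        offset += len(targets)
    return confusion, wrong_indices
```

The largest off-diagonal entries of the matrix point to the class pairs worth inspecting first, and `wrong_indices` lets you pull those exact examples back out of the dataset to look for mislabeled or ambiguous data.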

Batch Size and Gradient Accumulation

Experiment with different batch sizes during training. Smaller batches produce noisier gradient estimates, which can act as a mild regularizer and sometimes improve generalization, but they make less efficient use of the GPU, so training takes longer. Additionally, if you have limited computational resources (for example, the batch size you want does not fit in GPU memory), you can use gradient accumulation to simulate a larger effective batch size by accumulating gradients over multiple smaller batches before performing a weight update.
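Gradient accumulation needs only two changes to a standard training loop: divide each micro-batch loss by the number of accumulation steps, and call `optimizer.step()` only every `accum_steps` micro-batches. A minimal sketch, assuming a mean-reduced loss and equal-sized micro-batches (which is what makes the scaling exact); the function name is illustrative.

```python
import torch
import torch.nn as nn

def train_with_accumulation(model, loader, optimizer, loss_fn, accum_steps):
    """One pass over `loader`, updating the weights every `accum_steps` micro-batches.

    Effective batch size = loader batch size * accum_steps.
    """
    model.train()
    optimizer.zero_grad()
    for step, (inputs, targets) in enumerate(loader, start=1):
        loss = loss_fn(model(inputs), targets)
        # Scale so the summed gradients equal the gradient of the average loss
        # over the whole effective batch.
        (loss / accum_steps).backward()
        if step % accum_steps == 0:
            optimizer.step()
            optimizer.zero_grad()
    # In this sketch, gradients from leftover micro-batches (when len(loader) is not
    # a multiple of accum_steps) are not applied; they are cleared on the next call.
```

With plain SGD, four micro-batches of 4 examples and `accum_steps=4` produce the same update as a single batch of 16. Layers whose behavior depends on the batch, such as batch normalization, still only see the micro-batch, so the equivalence is not exact for them.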

Working with Hyper-parameters for Optimal Performance

Learning Rate Scheduling

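Experiment with different learning rate schedules during fine-tuning. A common approach is to use a smaller learning rate than you would when training from scratch, so the pre-trained weights are not overwritten, and to gradually decay it over the course of training, allowing the model to converge on the fine-tuning task. Learning rate warm-up, where you gradually increase the learning rate from a small value over the first steps of training before the decay begins, can also be beneficial because it avoids large, destabilizing updates while any newly added layers are still randomly initialized.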
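Warm-up followed by decay can be expressed with `torch.optim.lr_scheduler.LambdaLR`, which multiplies each parameter group's base learning rate by a user-supplied factor at every step. The sketch below uses a linear warm-up and a cosine decay to zero; that shape is one common choice rather than the only one, and the step counts and base learning rate in the usage are illustrative.

```python
import math
import torch

def warmup_cosine_schedule(optimizer, warmup_steps, total_steps):
    """Linear warm-up to the base LR, then cosine decay to 0 at `total_steps`.

    Call scheduler.step() once after every optimizer.step().
    """
    def lr_lambda(step):
        if step < warmup_steps:
            # Ramp up linearly from base_lr / warmup_steps to base_lr.
            return (step + 1) / warmup_steps
        # Fraction of the decay phase completed, clipped to [0, 1].
        progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
        return 0.5 * (1.0 + math.cos(math.pi * min(progress, 1.0)))
    return torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda)

# Usage with a dummy parameter: base LR 1e-3, 10 warm-up steps, 100 steps total.
param = torch.nn.Parameter(torch.zeros(1))
optimizer = torch.optim.SGD([param], lr=1e-3)
scheduler = warmup_cosine_schedule(optimizer, warmup_steps=10, total_steps=100)
```

Here the learning rate starts at 1e-4, reaches 1e-3 after the warm-up, and then follows a cosine curve down to zero by step 100.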

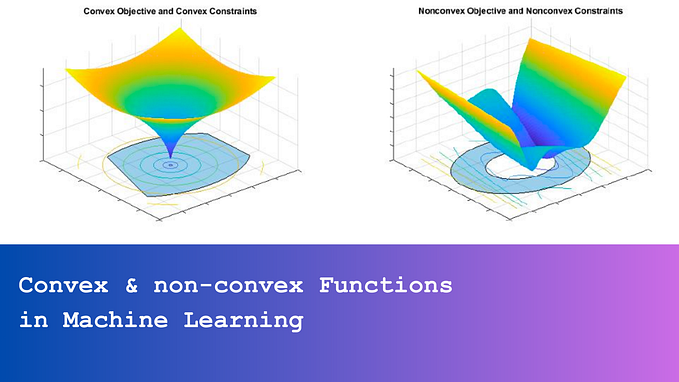

Regularization Techniques

Apply regularization techniques to prevent overfitting and improve generalization. Common techniques include dropout, L1 or L2 regularization, and early stopping. Regularization helps to control the complexity of the model and prevent it from memorizing the training set too well.
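The three techniques can be sketched together: dropout inside a new classification head, an optional L1 penalty added to the loss, weight decay through the optimizer, and a small early-stopping helper. The head, the penalty strength, and the patience value are illustrative assumptions. Note that `AdamW` applies decoupled weight decay, which is closely related to, but not identical to, adding an L2 penalty to the loss.

```python
import torch
import torch.nn as nn

class ClassifierHead(nn.Module):
    """Hypothetical new head placed on top of a pre-trained backbone's features."""
    def __init__(self, in_features, num_classes, dropout=0.3):
        super().__init__()
        self.dropout = nn.Dropout(p=dropout)  # only active in model.train() mode
        self.fc = nn.Linear(in_features, num_classes)

    def forward(self, x):
        return self.fc(self.dropout(x))

def l1_penalty(module, strength=1e-5):
    # L1 term to add to the training loss; pushes weights toward exact zeros.
    return strength * sum(p.abs().sum() for p in module.parameters())

class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` evaluations."""
    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_evals = 0

    def step(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience  # True means "stop training"

head = ClassifierHead(in_features=512, num_classes=10)
# Weight decay (decoupled, AdamW-style) as an L2-like regularizer.
optimizer = torch.optim.AdamW(head.parameters(), lr=1e-4, weight_decay=0.01)
```

In practice you would also save a checkpoint whenever `EarlyStopping` records a new best validation loss, and restore it after stopping.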

Evaluation and Hyperparameter Tuning

Regularly evaluate your model’s performance on a validation set during fine-tuning. Adjust hyperparameters such as learning rate, regularization strength, or optimizer parameters based on the validation results. Consider using techniques like grid search or random search to explore different hyperparameter combinations.
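Random search is short enough to write by hand. The sketch below assumes a function you supply, called `train_and_evaluate` here (a hypothetical name), that fine-tunes with a given configuration and returns a validation score where higher is better. The search ranges are illustrative, not recommendations; learning rate and weight decay are sampled on a log scale because useful values span several orders of magnitude.

```python
import random

def random_search(train_and_evaluate, num_trials=20, seed=0):
    """Random search over a few fine-tuning hyperparameters.

    `train_and_evaluate(config) -> float` must return a *validation* score.
    Returns the best config, its score, and the full trial history.
    """
    rng = random.Random(seed)
    history = []
    for _ in range(num_trials):
        config = {
            "lr": 10 ** rng.uniform(-5, -3),            # 1e-5 .. 1e-3, log-uniform
            "weight_decay": 10 ** rng.uniform(-4, -1),  # 1e-4 .. 1e-1, log-uniform
            "batch_size": rng.choice([16, 32, 64]),
        }
        score = train_and_evaluate(config)
        history.append((config, score))
    best_config, best_score = max(history, key=lambda item: item[1])
    return best_config, best_score, history
```

Keep the test set out of this loop entirely; selecting hyperparameters on it inflates the final accuracy estimate. For larger searches, dedicated libraries such as Optuna can replace this loop.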

Model Ensemble

Consider using ensemble methods to improve accuracy. You can train multiple instances of the pre-trained model with different initializations or data subsets and combine their predictions for the final result. Ensemble methods often lead to improved generalization and robustness. You can employ the following techniques to improve model accuracy with the help of Model Ensembling:

- Voting Ensemble: Combine predictions from multiple fine-tuned models and take a majority vote for classification tasks, or average the predictions for regression tasks. This simple approach often improves overall performance because the individual models' errors partly cancel out, which mainly reduces variance.

- Bagging (Bootstrap Aggregating): Train multiple instances of the same fine-tuned model, each on a different bootstrap sample of the training data (a random sample of the same size drawn with replacement). This helps reduce overfitting and improve model generalization.

- Stacking (Stacked Generalization): Train multiple diverse models, and then use another model (meta-learner) to combine their predictions. Stacking leverages the strengths of different models to create a more powerful ensemble.

- Different Architectures: Use diverse deep learning architectures for fine-tuning, such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), or Transformers. Each architecture may excel in capturing different patterns or features in the data.

- Use Different Hyperparameters: Fine-tune the models using different hyperparameter settings and ensemble their predictions. Hyperparameter diversity can lead to improved ensemble performance.
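Voting and bagging need very little code. A minimal sketch, assuming each fine-tuned model produces logits of shape `(N, num_classes)` for the same batch; the helper names are illustrative. Soft voting averages the predicted probabilities, hard voting takes the majority class, and `bootstrap_indices` draws the with-replacement sample that one bagging member would be trained on. For regression, you would simply average the models' outputs.

```python
import torch

def soft_vote(logits_list):
    """Average the class probabilities of several models, then take the argmax."""
    probs = torch.stack([logits.softmax(dim=-1) for logits in logits_list])  # (M, N, C)
    return probs.mean(dim=0).argmax(dim=-1)

def hard_vote(logits_list):
    """Majority vote over each model's predicted class (ties resolved by torch.mode)."""
    preds = torch.stack([logits.argmax(dim=-1) for logits in logits_list])  # (M, N)
    return preds.mode(dim=0).values

def bootstrap_indices(dataset_size, generator=None):
    """Indices for one bagging member: `dataset_size` draws with replacement."""
    return torch.randint(0, dataset_size, (dataset_size,), generator=generator)
```

A bagging member's training set can then be built with `torch.utils.data.Subset(train_set, bootstrap_indices(len(train_set)).tolist())`. Soft voting is usually preferred when the models' probabilities are reasonably calibrated, since it uses their confidence rather than just their top choice.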

Remember, when implementing Model Ensemble techniques, it’s crucial to strike a balance between model diversity and complexity. Too many models or overly complex ensembles might lead to computational overhead and diminishing returns.

Other Overlooked but Very Important Techniques

Here are some additional suggestions that are often overlooked but can have a significant impact on improving the accuracy of fine-tuning a pre-trained model.

Choosing the Right Layers to Fine-Tune

Decide which layers of the pre-trained model to freeze and which to fine-tune. Typically, earlier layers capture more general features (such as edges and textures in vision models), while later layers capture more task-specific features. If your new task is similar to the task the pre-trained model was originally trained on, fine-tuning only the last few layers is often enough; the more your task differs, the more layers you may need to unfreeze, moving from the end of the network toward the input.
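Freezing a layer in PyTorch means setting `requires_grad = False` on its parameters and, ideally, passing only the remaining trainable parameters to the optimizer. The sketch below uses a small hypothetical `nn.Sequential` as a stand-in for a pre-trained network; with a real model you would select submodules by name instead (for a torchvision ResNet, for example, `layer4` and `fc`).

```python
import torch
import torch.nn as nn

def freeze_all_but_last(model: nn.Sequential, num_trainable: int):
    """Freeze every child module except the last `num_trainable` ones."""
    children = list(model.children())
    for i, child in enumerate(children):
        trainable = i >= len(children) - num_trainable
        for p in child.parameters():
            p.requires_grad = trainable

# Hypothetical stand-in for a pre-trained network: early blocks first, head last.
model = nn.Sequential(
    nn.Linear(32, 64), nn.ReLU(),  # early layers: more general features
    nn.Linear(64, 64), nn.ReLU(),
    nn.Linear(64, 10),             # last layer: task-specific head
)
freeze_all_but_last(model, num_trainable=3)  # train the second Linear/ReLU and the head
# Give the optimizer only the parameters that are still trainable.
optimizer = torch.optim.AdamW((p for p in model.parameters() if p.requires_grad), lr=1e-4)
```

One caveat: frozen batch normalization layers still update their running statistics in `train()` mode, so call `.eval()` on them if you want them fully fixed.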

Transfer Learning Objective

Instead of directly fine-tuning the pre-trained model on your target task, consider using an intermediate transfer learning objective. This involves first training the pre-trained model on an auxiliary task and then using the features learned from this task for your main task. The auxiliary task should be related to your main task but easier to solve, which can help the model learn more generalizable representations.

Model Size and Complexity

Depending on your dataset and task, the pre-trained model you're using may be too large or complex. Larger models have more parameters, which makes them more prone to overfitting when fine-tuned on small datasets. In such cases, consider using a smaller variant of the pre-trained model or applying techniques like model pruning or knowledge distillation to reduce model complexity.
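Both techniques can be sketched briefly. The distillation loss below follows the common recipe of matching a large teacher's temperature-softened outputs with a KL-divergence term while still training on the true labels; the temperature and mixing weight are illustrative defaults. The pruning part uses `torch.nn.utils.prune.l1_unstructured` to zero the smallest-magnitude weights of a single layer.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.nn.utils.prune as prune

def distillation_loss(student_logits, teacher_logits, targets, temperature=2.0, alpha=0.5):
    """Knowledge distillation: soft targets from the teacher plus hard labels."""
    # KL divergence between temperature-softened distributions; the T^2 factor keeps
    # gradient magnitudes comparable across temperatures.
    soft = F.kl_div(
        F.log_softmax(student_logits / temperature, dim=-1),
        F.softmax(teacher_logits / temperature, dim=-1),
        reduction="batchmean",
    ) * temperature ** 2
    hard = F.cross_entropy(student_logits, targets)
    return alpha * soft + (1 - alpha) * hard

# Magnitude pruning: zero out the 50% of weights with the smallest absolute value.
layer = nn.Linear(8, 4)
prune.l1_unstructured(layer, name="weight", amount=0.5)
prune.remove(layer, "weight")  # make it permanent by dropping the mask reparametrization
```

During distillation, run the teacher in `eval()` mode under `torch.no_grad()` and backpropagate only through the smaller student. Unstructured pruning zeroes individual weights but does not by itself speed up dense matrix multiplications.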

Fine-tuning Strategy

Rather than fine-tuning the entire pre-trained model at once, you can adopt a progressive unfreezing approach. Start with all pre-trained layers frozen and train only the new head, then unfreeze the layers in stages, one group at a time, starting from the layers closest to the output. This tends to make training more stable and reduces the risk of catastrophic forgetting of the pre-trained representations.
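A minimal sketch of progressive unfreezing, assuming the model has already been split into layer groups listed from the output toward the input and that one more group is unfrozen each epoch; the grouping and the one-group-per-epoch schedule are illustrative choices. The optimizer can be built over all parameters up front, because PyTorch optimizers skip parameters whose `.grad` is `None`, which is the case for frozen ones.

```python
import torch
import torch.nn as nn

def progressive_unfreeze(groups, epoch):
    """Unfreeze layer groups from the top down, one additional group per epoch.

    `groups` is ordered from the output (head) toward the input: at epoch 0 only
    groups[0] trains, at epoch 1 groups[0:2] train, and so on.
    """
    for i, group in enumerate(groups):
        for p in group.parameters():
            p.requires_grad = i <= epoch

# Hypothetical model split into layer groups, listed head-first.
backbone_low = nn.Linear(16, 32)
backbone_high = nn.Linear(32, 32)
head = nn.Linear(32, 4)
groups = [head, backbone_high, backbone_low]
model = nn.Sequential(backbone_low, nn.ReLU(), backbone_high, nn.ReLU(), head)
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-4)

# Inside your existing training loop:
# for epoch in range(num_epochs):
#     progressive_unfreeze(groups, epoch)
#     ...train one epoch as usual...
```

A common refinement is to give the lower groups smaller learning rates through separate optimizer parameter groups, so the most general features change the least.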

Domain-Specific Pre-training

If your target task is in a specific domain, consider pre-training the model on a large dataset from that domain before fine-tuning. This can help the model learn domain-specific features and improve its performance on the target task.

Loss Function Modification

Experiment with different loss functions that are tailored to your specific task or dataset. For example, if your dataset has class imbalance, you can use weighted or focal loss to give more importance to underrepresented classes. Alternatively, you can design a custom loss function that incorporates domain knowledge or specific objectives of your task.
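Both options from the paragraph can be sketched in a few lines. `inverse_frequency_weights` computes class weights for the built-in `weight` argument of `F.cross_entropy`, and `focal_loss` implements the multi-class focal loss, which scales each example's cross-entropy by `(1 - p_t) ** gamma` so that well-classified examples contribute less. Both helper names and the default `gamma=2.0` are illustrative.

```python
import torch
import torch.nn.functional as F

def inverse_frequency_weights(targets, num_classes):
    """Class weights proportional to 1 / class frequency (average weight per example is 1)."""
    counts = torch.bincount(targets, minlength=num_classes).float()
    return counts.sum() / (num_classes * counts.clamp(min=1))

def focal_loss(logits, targets, gamma=2.0, alpha=None):
    """Multi-class focal loss: cross-entropy down-weighted by (1 - p_t) ** gamma.

    `alpha` is an optional per-class weight tensor of shape (num_classes,).
    """
    log_probs = F.log_softmax(logits, dim=-1)
    # Log-probability and probability assigned to the true class of each example.
    log_pt = log_probs.gather(1, targets.unsqueeze(1)).squeeze(1)
    pt = log_pt.exp()
    loss = -((1 - pt) ** gamma) * log_pt  # easy examples (pt close to 1) contribute little
    if alpha is not None:
        loss = alpha[targets] * loss
    return loss.mean()
```

For the weighted variant, pass the weights directly: `F.cross_entropy(logits, targets, weight=inverse_frequency_weights(targets, num_classes))`. With `gamma=0` and no `alpha`, the focal loss reduces to ordinary cross-entropy, which is a handy sanity check.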

Transfer Learning from Multiple Models

Instead of relying on a single pre-trained model, consider leveraging multiple pre-trained models for transfer learning. The models can come from different tasks or datasets, and you can combine their representations or predictions during fine-tuning. This can help capture a broader range of features and improve accuracy.
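One way to combine representations is to concatenate the feature vectors of several frozen backbones and train a single classification head on top. The sketch below assumes each backbone maps an input batch to a `(N, feature_dim)` tensor; the class name and the two small stand-in backbones are hypothetical placeholders for real pre-trained models.

```python
import torch
import torch.nn as nn

class FeatureFusionClassifier(nn.Module):
    """Concatenate features from several frozen backbones and train one head on top."""
    def __init__(self, backbones, feature_dims, num_classes):
        super().__init__()
        self.backbones = nn.ModuleList(backbones)
        for p in self.backbones.parameters():
            p.requires_grad = False  # keep the pre-trained representations fixed
        self.head = nn.Linear(sum(feature_dims), num_classes)

    def forward(self, x):
        # No graph is needed through the frozen backbones.
        with torch.no_grad():
            feats = [backbone(x) for backbone in self.backbones]
        return self.head(torch.cat(feats, dim=1))

# Hypothetical stand-ins for two different pre-trained backbones.
backbone_a = nn.Sequential(nn.Linear(20, 64), nn.ReLU())
backbone_b = nn.Sequential(nn.Linear(20, 32), nn.Tanh())
model = FeatureFusionClassifier([backbone_a, backbone_b], feature_dims=[64, 32], num_classes=5)
```

If the backbones contain dropout or batch normalization, call `.eval()` on them after `model.train()` so their behavior stays fixed. Once the head has converged, you can optionally unfreeze the backbones and fine-tune everything with a small learning rate.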

These additional suggestions should help you fine-tune your pre-trained model more effectively and achieve better accuracy on your target task.

Remember that fine-tuning is an iterative process, and it often requires experimentation and adaptation based on the characteristics of your data and task.

Conclusion

In conclusion, we have explored a comprehensive set of techniques in this ultimate guide to fine-tuning in PyTorch, all aimed at enhancing model accuracy. By focusing on key aspects such as data quality and quantity, data preprocessing, and augmentation, we lay the foundation for improved performance. Additionally, through data cleaning and error analysis, we can fine-tune our models to make more accurate predictions.

Furthermore, we examined strategies such as batch size selection and gradient accumulation, learning rate scheduling, and regularization to optimize the training process. We also discussed the importance of evaluating and tuning hyperparameters, along with leveraging model ensembles and transfer learning from multiple models. Finally, we covered domain-specific pre-training, fine-tuning strategies, and loss function modifications that help fine-tune our models effectively.

By incorporating these techniques into our PyTorch workflows, we can create powerful models with enhanced accuracy, capable of tackling real-world challenges across diverse domains. Let this guide serve as a valuable resource to elevate your fine-tuning capabilities and achieve remarkable results in your machine learning projects.


Share your thoughts

Thank you for reading this far. I am eager to hear your thoughts, comments, and suggestions on how we can further enhance this article. Your feedback is invaluable to me, as it will not only help improve the current content but also shape the direction of the upcoming parts of this series.